YOUTUBE VR

I work on several projects involving real-time graphics at YouTube, including YouTube VR. When I started, there was no dedicated researcher, so I ran user research sessions myself alongside the PM.

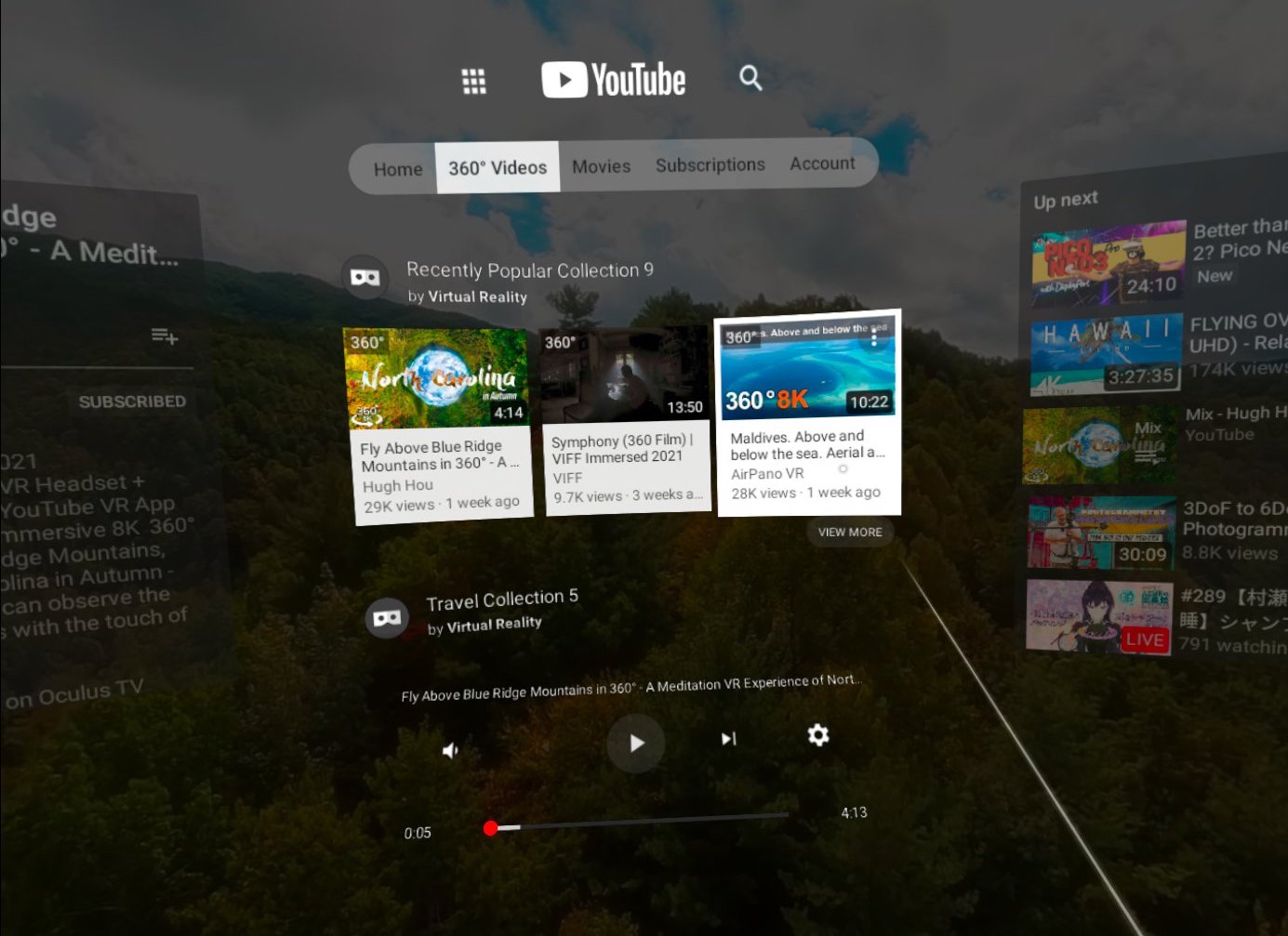

We used diary studies and remote sessions with users to define critical user journeys and to set the feature priorities that shaped the project roadmap. Working with engineers, we introduced support for playlists, reading comments, better player controls, a unified dark UI theme, search filters, and a way to browse movies.

Here's a before-and-after with some of the UI changes:

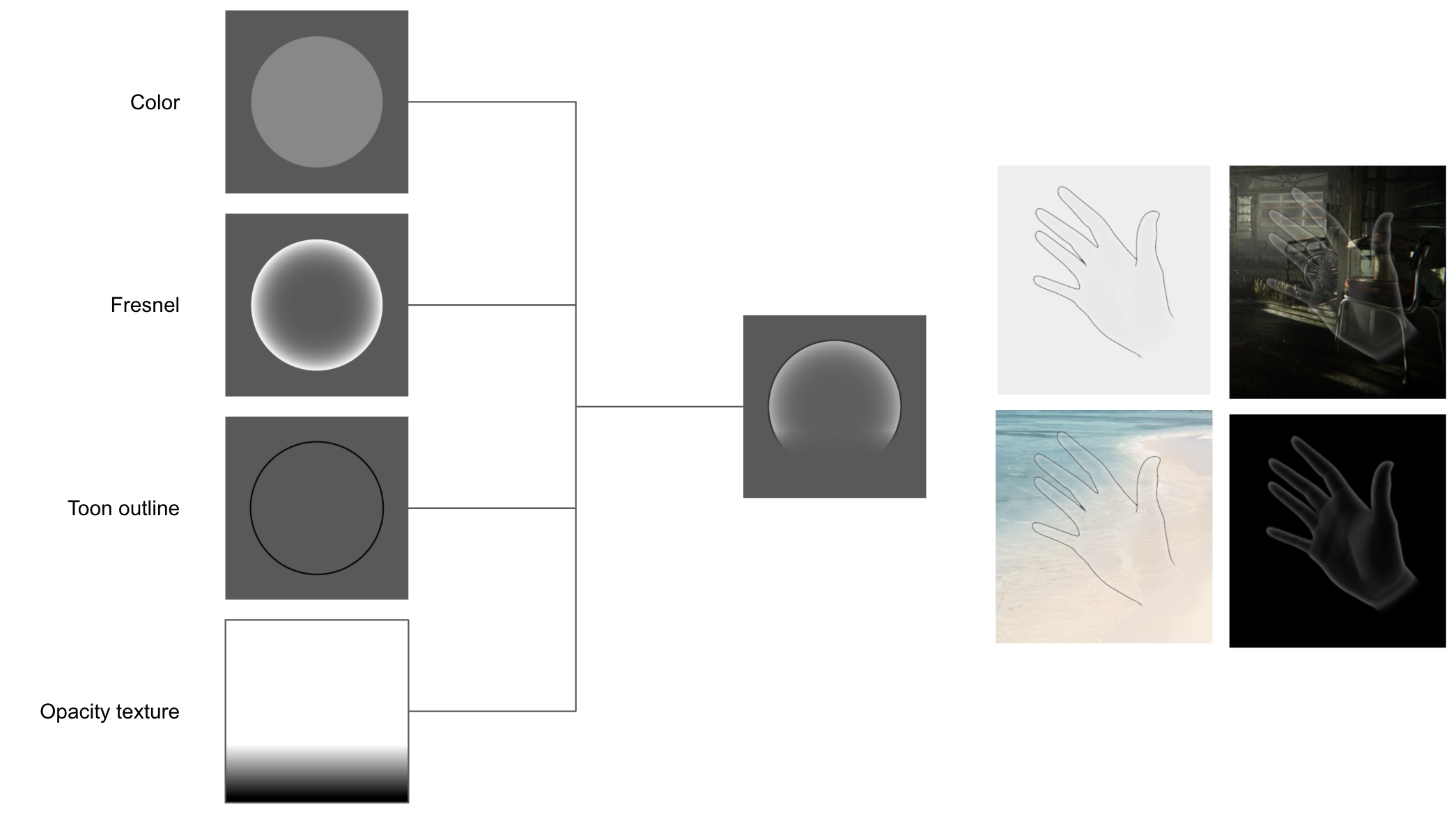

Introducing hand tracking required careful tuning of the visual feedback system. It also meant working through tricky questions about ray angles, when and how to accept user input, and how to make scrolling feel natural rather than frustrating. There are still many ways this can be improved.
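
One common way to handle "when to accept input" with tracked hands is hysteresis: a pinch engages at one distance threshold and releases only past a wider one, so tracking jitter near a single cutoff does not produce rapid press/release flicker. The sketch below illustrates that general technique. It is not necessarily what YouTube VR does. The thresholds and the `PinchDetector` name are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds: distance in meters between thumb tip and index tip.
PINCH_ON = 0.02   # fingers must come closer than this to start a press
PINCH_OFF = 0.04  # fingers must separate farther than this to release


@dataclass
class PinchDetector:
    """Hypothetical two-threshold (hysteresis) pinch gate.

    The gap between PINCH_ON and PINCH_OFF absorbs small tracking jitter,
    so a pinch hovering near one value does not toggle every frame.
    """
    pressed: bool = False

    def update(self, distance: float) -> Optional[str]:
        # Engage only when not already pressed and fingers are close enough.
        if not self.pressed and distance < PINCH_ON:
            self.pressed = True
            return "press"
        # Release only once fingers clearly separate past the wider threshold.
        if self.pressed and distance > PINCH_OFF:
            self.pressed = False
            return "release"
        # Otherwise keep the current state and emit no event.
        return None
```

For example, feeding per-frame distances `0.05, 0.019, 0.03, 0.041` yields no event, then `"press"`, then no event (still inside the hysteresis band), then `"release"`.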

Passthrough XR support represents the beginning of XR use cases in consumer devices.

Going forward, there are still ways to improve the quality of immersion.

I also introduced the ability to zoom 360-degree videos in YouTube's mobile app, with subtle haptic feedback and "sticky" points at the midpoint and endpoints of the zoom range.
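
A minimal sketch of how sticky zoom points with haptics can work, assuming a zoom level normalized to `[0.0, 1.0]`. Raw input near a detent snaps to it, and a haptic tick fires only on arrival at a detent, not on every frame spent resting there. The snap radius, the `StickyZoom` name, and the list standing in for a platform haptics call are all hypothetical, not the app's actual implementation.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical detents: the two endpoints and the midpoint of a normalized zoom range.
DETENTS = (0.0, 0.5, 1.0)
SNAP_RADIUS = 0.04  # hypothetical: how close raw input must be to "stick"


@dataclass
class StickyZoom:
    """Snaps zoom to nearby detents and records when a haptic tick should fire."""
    level: float = 0.0
    # Stand-in for a platform haptics call; a real app would trigger feedback here.
    haptic_events: List[float] = field(default_factory=list)

    def update(self, raw: float) -> float:
        raw = min(max(raw, 0.0), 1.0)  # clamp input to the zoom range
        # Find the first detent within the snap radius, if any.
        detent = next((d for d in DETENTS if abs(raw - d) <= SNAP_RADIUS), None)
        # Fire a tick only when arriving at a detent we were not already on.
        if detent is not None and detent != self.level:
            self.haptic_events.append(detent)
        self.level = raw if detent is None else detent
        return self.level
```

Firing only on the transition keeps the feedback subtle. A pinch that hovers around the midpoint produces a single tick rather than a continuous buzz.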

More to come in YouTube VR and beyond.